Rethinking Design in the Age of GenAI


Quick heads up: This piece draws from my experience with tools like Midjourney (AI image generation), Cursor (AI-powered code editor), Bolt (no-code platform), and Lovable (design tool). I’ve built and shipped three revenue-generating products with these tools over the last 6 months and have some evolving thoughts on the role of design. While you don’t need to be familiar with these tools to follow along, knowing them helps you appreciate the parallels I’m drawing about where design tools are headed.

As I spend time with AI code generation tools, I keep returning to the question of developers’ future role. Tools like Cursor and Bolt have PMs building stuff on their own. Claude’s highest API usage is for code, and many industry leaders predict more than 70% of code will be AI-generated by year’s end. Developer careers seem at stake.

Yet I’m working with developers more than ever before. We ship faster, which makes us more ambitious. Small startups now compress quarterly roadmaps into sprints. But there’s something brewing on the side – this velocity unlock means all teams must match software development’s new pace. And there’s one department where GenAI hasn’t yet made its foray, and it is failing to keep up.

The Missing Piece: Why Text-to-Design Lags Behind

Figma has ruled as the design world’s standard for years. They famously took five years to launch out of beta, perfecting their product with early testers. While Figma presumably perfects their Text-to-Design model, the rest of the world has moved on. We live in a strange moment where “text to everything” exists except text to design. We have LLMs for Text-to-Image, Text-to-Video, Text-to-Code, Text-to-Music, Text-to-3D assets – but no Text-to-Design. This has significantly handicapped designers’ velocity compared to other teams unlocking GenAI benefits.

The reason is fascinating. Other creative modalities have commonly accepted formats not owned by anyone. Images have jpg and png – open formats readable by many tools. Same with code (.js files) and video (.mp4). Design, however, has been driven by private companies (Adobe, Figma) with minimal democratization. There’s no widely available software to open these files, a limited open source community, and no standard accepted format. This means LLMs cannot create or edit a ‘.fig’ file. And this has handicapped design generation.

Fascinatingly enough, LLMs went straight to generating code, and design started becoming a by-product of that.

While product design awaits its AI breakthrough, I think it’ll be useful to look at how image generation tech evolved and use that as a parallel for how product design will evolve.

I’ve divided the rest of this into 3 chapters:

- Chapter 1: The First Design Revolution: Midjourney

- Chapter 2: Beyond the Handoff: Convergence of Design and Code

- Chapter 3: How Companies Invest in Design

Chapter 1: The First Design Revolution: Midjourney

Midjourney, a leading AI image generation tool, has fundamentally transformed the graphic design workflow by allowing users to create stunning visuals through text prompts rather than manual design work. This shift represents the first wave of AI’s impact on design.

The graphic design workflow is as follows:

- You get a creative brief from a marketing manager or your creative head.

- You use tools like Photoshop/Illustrator to create the output. (All the stuff about layers, paint brushes, masking, etc.)

- You get the final image output.

With the advent of Midjourney, creators skipped step 2 and went directly from step 1 to step 3. While a small minority of design purists might argue that you don’t have full control, a large population is just stoked at what’s possible once the skill barrier is removed.

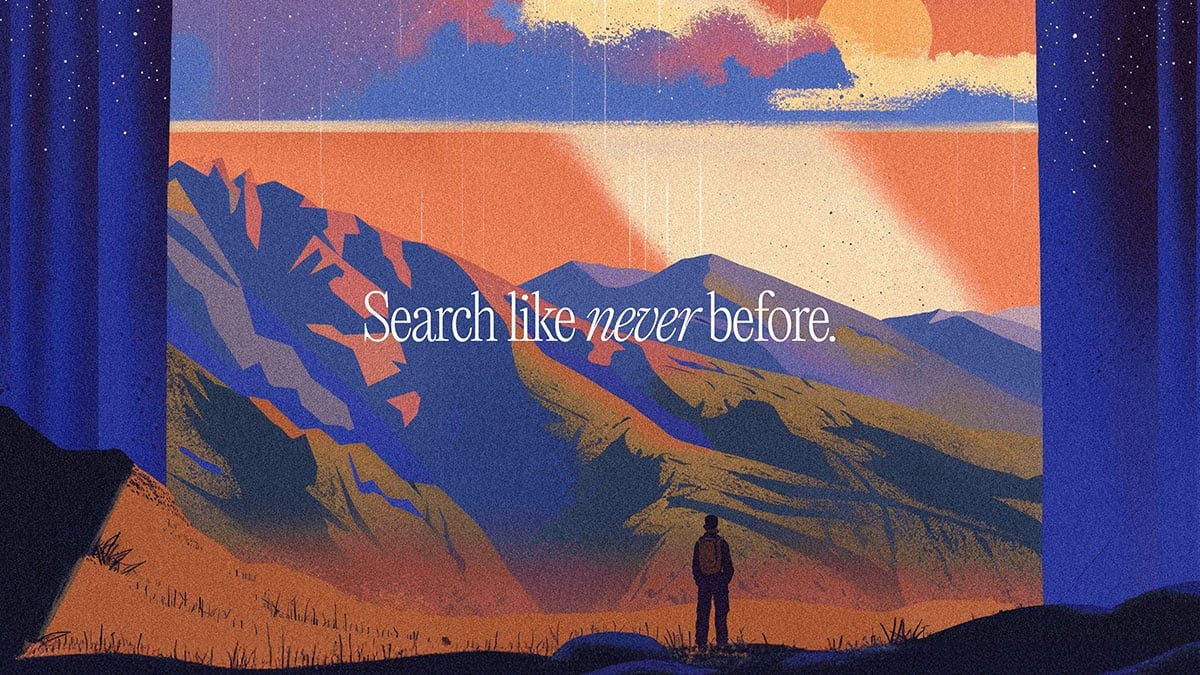

Take the image below as an example. It is the output of a prompt that was 3 lines long. An equivalent in Photoshop would take a senior professional illustrator about 3 days.

What Midjourney unlocked is freedom from specific tool knowledge.

This revolution has now enabled everyone to be a graphic designer. I expect this to grow manifold once high quality image generation comes natively in Gemini/ChatGPT.

This democratization of image creation has strong implications for how businesses approach design resources. The shift from technical expertise to prompt crafting has created new roles and workflows. Companies like Perplexity have adopted Midjourney images as part of their brand guidelines. Below is a tweet from the brand team at Perplexity.

Images that would otherwise take weeks are now a few minutes away.

Closer to home, many now hire Midjourney specialists.

Studying Midjourney also gives us a good preview of the pace of advancements happening in models. Below is a comparison of images generated by Midjourney for the same prompt across the last 6 versions, v1 to v6.

Evolution of Midjourney Image generation.

Adding a high-resolution image for v6.1.

It took Midjourney 18 months to go from the first image to the last one. The acceleration is here, and it is happening fast.

I want to highlight an important point here though. When you can generate anything, what you choose to design matters more. Your understanding of textures, patterns, colors, and typography becomes crucial. Taste matters more than ever. And hence, while Midjourney enabled anyone to be a designer, its adoption is still strongest among designers who can come up with the right prompts, evaluate results, tweak them in software, and use them in production applications. The best results from Midjourney require you to have taste.

My takeaways from this:

- Specialised tool knowledge has become redundant for most use cases. People go from text to final output, skipping the in-between steps.

- Everyone is now a graphic designer. If you want to be able to generate a beautiful painting for your wall featuring memories of items that you really care about (watches, shoes, music you love), you can really prompt it out. There’s no question about it.

- The velocity of graphic design has improved 10x, and this has implications for creative team sizes going forward.

- Designing is now more about taste, clarity of thought & judgement.

These changes in graphic design foreshadow what’s coming to product design. As we’ll see in the next section, the boundary between design and implementation is already beginning to blur in more complex UI environments.

Chapter 2: Beyond the Handoff: Convergence of Design and Code

While Midjourney revolutionized static image creation, product design presents a more intricate challenge. It’s not just about visual aesthetics - it involves complex interaction patterns, state management, and technical implementation. However, the emergence of AI code generation offers an interesting shortcut: instead of trying to solve the ‘design-to-code’ translation problem, what if we could skip the intermediate design phase entirely?

LLMs are rapidly improving at creating basic UIs that junior designers struggle with. They create these interfaces at incredible speed, potentially eliminating the separate design step entirely – going from idea directly to code.

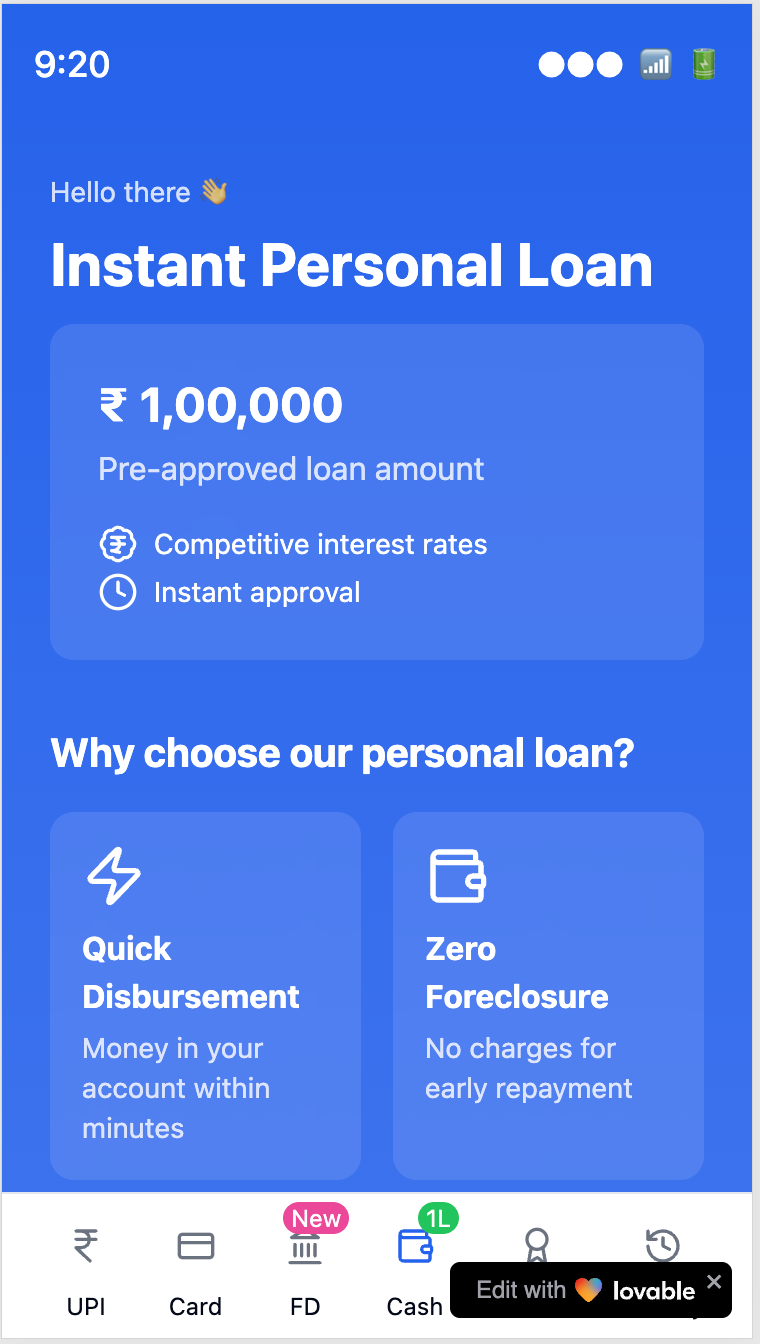

Below is an example from the community: a semi end-to-end journey for a loan feature, created by a designer while watching a cricket match!

I would argue that these designs are close to what a junior designer would produce, or maybe even better.

If you look at the second screen, you would notice that it got quite a few things right:

- The information hierarchy is clean.

- The hero card has a relevant icon, copy, title, description, and tags. The opacity for the description is also correct.

- The amount card has the right copy and emphasis on important information.

- Smaller details like rounded corners maintain consistency across all cards.

More importantly, the WhatsApp screenshot above shows the liberation that PMs feel when they are able to think about & express product flows directly as end consumer experiences, instead of writing them up as documents and imagining the rest in thin air.

These AI capabilities force us to reconsider a fundamental question about our current workflows: Why was design ever a separate step? Why build the same thing twice – once in Figma defining fonts and spacing, then having another team painstakingly copy those details into code? Design on Figma is a transient state that exists between document and shipped product. It loses any relevance after the product is shipped. The transition from Figma to code is also messy and inefficient. There’s always a drop-off between what was designed and what ships, and an insane amount of time & coordination is needed between teams to make pixel perfection happen. This separation exists today because of technological limitations, not because it’s a desired process.

The ideal state would be a designer designing a screen, then an engineer directly wiring it to backend. Designers would feel more control if their designs shipped directly without frontend engineers meddling. Product leaders would welcome reduced shipping time, and engineers could focus on integrations, APIs, and performance instead of pixel perfection.

As AI tools improve, the artificial separation between design and implementation becomes increasingly difficult to justify.

Chapter 3: How Companies Invest in Design

While the technical capabilities for design-code convergence are emerging, adoption will vary across different business contexts. To better understand this, we can look at how different types of products value design in their overall strategy. A company’s investment in design is primarily influenced by one question: how much does design influence my customer’s final buying decision?

Each product category has a different threshold at which design matters:

- Trust-Based Products (Banks): When we look at the UI of any traditional banking application, it sucks. There are a lot of neobanks or new-age banks that have adopted great design. Yet none of the people in my circle who are tech-savvy or appreciate design have adopted them as their primary account. Why? Because banks are in the business of selling trust. When users are deciding which bank account to open, they think about who else in the family has that account, the proximity of the nearest branch, and the interest rate. It doesn’t even cross their minds to check the UI/UX of the banking portal.

- Efficiency-Driven Products (PhonePe, Zepto): Zepto had a terrible design for the first 2 years after launch (rightly so, they were very very early in their journey), but the company focused most of its time on logistics and getting 10-minute delivery right. The consumer here was buying delivery reliability.

- Identity-Based Products (Fashion, Luxury): Design is fundamental from day one as it directly represents the core value proposition.

This business context explains why, despite the exciting possibilities of AI-generated design, adoption will be uneven. Companies will embrace design-code tools at different rates based on their category: efficiency-driven products will likely be early adopters to reduce costs and speed up development, while trust-based products may be more conservative.

A very important observation here is that once companies have achieved parity on other parameters, design plays a very large role in decision making. All other things being equal, product & design make or break the company. Ex: In a GenAI company, what matters most is the model. If your LLM sucks, design can’t save it. But between equal LLMs, differentiated design & product could itself be a moat.

A company that’s building a navigation system for marine engineers needs a great functional UI but won’t necessarily sell its software because of design.

For a lot of companies that are trying to find PMF or solve workflows, today’s LLM-to-code tooling is a very good option to gain velocity and reduce cost.

The oncoming of a new design-engineer

- Midjourney showed that the game has changed from knowing Photoshop to knowing how to prompt.

- The onset of LLM-to-code means that we can finally skip intermediate representations, and designers can start working on the final shipped screens.

- And companies that are not design-first or are early in their journey can use LLMs to go directly to final code.

The future of design software will be a platform where designers create designs through prompts. You’ll have a canvas that feels like design software but writes code underneath. This is an important distinction to make, and getting this right is the holy grail.

The designer can just prompt “Make three variations of this header component” and it will generate them. You can publish directly, creating relevant components for engineers to integrate with APIs. Once you design, there’s no redoing it in code.
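
To make the idea of a canvas that writes code underneath a little more concrete, here is a minimal TypeScript sketch. Everything in it is hypothetical: the `HeaderSpec` type, the `makeVariations` and `renderHeader` functions, and the hard-coded list of tweaks standing in for whatever a model would return for the prompt. It only illustrates the shape of the idea (the design is structured data, and each variation is emitted directly as code), not how any existing tool works.

```typescript
// Hypothetical design spec: the canvas would edit this data, not pixels.
interface HeaderSpec {
  title: string;
  align: "left" | "center";
  background: string; // any CSS color
  paddingPx: number;
}

// Stand-in for a model's answer to "Make three variations of this header
// component". A real tool would get these tweaks from an LLM; they are
// hard-coded here (an assumption) so the sketch stays deterministic.
const tweaks: Partial<HeaderSpec>[] = [
  { align: "center" },
  { background: "#111827", paddingPx: 32 },
  { align: "center", background: "#f3f4f6", paddingPx: 24 },
];

// Each variation is the base spec with one set of tweaks applied on top.
function makeVariations(base: HeaderSpec): HeaderSpec[] {
  return tweaks.map((tweak) => ({ ...base, ...tweak }));
}

// Emit markup straight from the spec, so there is no redo-it-in-code step.
// The title is assumed to be trusted text; this sketch does no HTML escaping.
function renderHeader(spec: HeaderSpec): string {
  const style =
    `text-align:${spec.align};` +
    `background:${spec.background};` +
    `padding:${spec.paddingPx}px`;
  return `<header style="${style}"><h1>${spec.title}</h1></header>`;
}

const base: HeaderSpec = {
  title: "Loans",
  align: "left",
  background: "#ffffff",
  paddingPx: 16,
};

const variations = makeVariations(base).map(renderHeader);
variations.forEach((html) => console.log(html));
```

Because each variation is already markup, picking one on the canvas would amount to publishing it as-is, leaving engineers to wire it to APIs.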

There is a white-space opportunity here: either Figma adapts to work well with code, or new software gets created that gives designers the flexibility & creativity they need while generating code outputs.

The new opportunity

The convergence of design and code through AI represents a fundamental shift in how digital products will be created. This isn’t just about efficiency gains but about reimagining the entire process from ideation to production. The billion-dollar opportunity I envision is a platform that provides designers the creative flexibility they need while simultaneously generating production-ready code.

This new paradigm will require new skills – an understanding of design principles, prompt engineering, and enough technical knowledge to guide the AI effectively. But the rewards for companies that adopt this will be substantial: dramatically faster iteration cycles, more cohesive products, and the ability for small teams to build what previously required entire departments.

I’m excited to see this transformation unfold and believe it will ultimately lead to better digital experiences for everyone. The question isn’t if this convergence will happen, but how quickly we can adapt to and shape this new reality.